MLOps: Operationalize Machine Learning Models

Overview

Artificial intelligence and machine learning are reshaping industries, but most organizations still rely on traditional technology lifecycles designed for DataOps, not data science. Without a framework built for ML, AI/ML projects often fail to deliver value, because implementing and scaling ML is difficult, unfamiliar, and operationally demanding for many teams.

To succeed, organizations need to adopt MLOps (Machine Learning Operations) practices to support the full lifecycle of AI/ML initiatives.

AI/ML projects that lack a proper architecture for model building, deployment, monitoring, and iteration often fail. Successful execution requires close collaboration between data scientists and data engineers to automate, scale, and operationalize machine learning models.

MLOps provides the structure to operationalize ML, extending DataOps to support continuous model development, deployment, and governance.

Effective MLOps implementation depends on clear roles across the organization, including Data Engineers, Data Scientists, Subject Matter Experts, and Business Owners, all working together within an expanded DataOps framework.

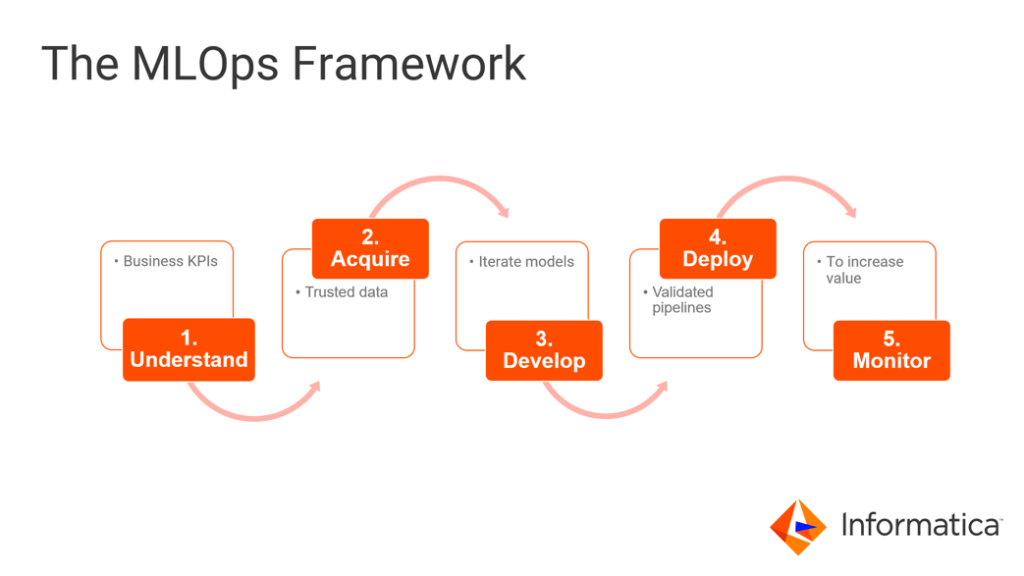

The MLOps Framework

There are five steps that form the framework for successful MLOps.

Understand Your Business KPIs

Organizations must define clear metrics and KPIs before beginning ML initiatives. This non-technical stage requires collaboration between data stewards and subject-matter experts to align on a shared definition of “what success looks like.”

Acquire Data

In this phase, data scientists, data engineers, and domain experts work together to identify, ingest, and integrate the data required for machine learning. Data is moved into the cloud data lakehouse, quality rules are applied, and datasets are prepared, labeled, and split into training and testing sets - ensuring readiness for modeling.
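As a rough illustration of the preparation step described above, the following minimal sketch applies a quality rule and then splits the cleaned records into training and testing sets. The rule itself (`passes_quality_rules`), the record layout, and the 80/20 split are assumptions chosen for the example, not part of any specific product or the framework itself.

```python
import random


def passes_quality_rules(record):
    """Hypothetical quality rule: the label and every field must be present."""
    return all(value is not None for value in record.values())


def split_train_test(records, test_fraction=0.2, seed=42):
    """Drop records that fail the quality rule, shuffle reproducibly, then split."""
    clean = [r for r in records if passes_quality_rules(r)]
    rng = random.Random(seed)  # fixed seed so the split is repeatable
    rng.shuffle(clean)
    n_test = int(len(clean) * test_fraction)
    return clean[n_test:], clean[:n_test]  # (train, test)


# Illustrative data: ten valid labeled records and one with a missing feature.
records = [{"feature": i, "label": i % 2} for i in range(10)]
records.append({"feature": None, "label": 1})

train, test = split_train_test(records)
```

Fixing the random seed keeps the split reproducible, which makes later model comparisons easier to trust.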

Develop ML Models

Model development is the core of the MLOps framework. Data scientists build, train, validate, and iterate models based on defined metrics and KPIs.

Informatica’s Data Engineering portfolio integrates seamlessly with ML development tools to support this process in a controlled, non-production environment.

Deploy ML Models

Data engineers integrate and validate the ML models against production data, ensuring alignment with the agreed OKRs (objectives and key results) and KPIs. Once validated, the data pipeline is deployed into production, with DataOps teams supporting continuous usage and monitoring.
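One way to picture the validation step is as a gate that a model must pass before promotion. The sketch below is only an assumption-laden example: the KPI is taken to be a minimum accuracy on a labeled sample of production data, and `ready_for_production`, the sample layout, and the thresholds are hypothetical names and values.

```python
def accuracy(predictions, actuals):
    """Fraction of predictions that match the actual labels."""
    if len(predictions) != len(actuals) or not actuals:
        raise ValueError("predictions and actuals must be non-empty and equal in length")
    correct = sum(p == a for p, a in zip(predictions, actuals))
    return correct / len(actuals)


def ready_for_production(model, production_sample, kpi_min_accuracy=0.9):
    """Return True if the model meets the (assumed) accuracy KPI on production data."""
    predictions = [model(row["features"]) for row in production_sample]
    actuals = [row["label"] for row in production_sample]
    return accuracy(predictions, actuals) >= kpi_min_accuracy


# Illustrative stand-in for a trained model: a simple threshold classifier.
def toy_model(x):
    return int(x >= 5)


# Labeled production sample; the last row's label disagrees with the model.
sample = [{"features": x, "label": int(x >= 5)} for x in range(9)]
sample.append({"features": 9, "label": 0})

meets_kpi = ready_for_production(toy_model, sample, kpi_min_accuracy=0.9)
meets_stricter_kpi = ready_for_production(toy_model, sample, kpi_min_accuracy=0.95)
```

In practice the metric and threshold would come from the KPIs agreed in the first step of the framework rather than from a hard-coded default.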

Monitor and Retrain ML Models

After deployment, the model is connected to a metrics monitoring system. Data scientists retrain the model on a defined cadence or when drift occurs, that is, when production data or model performance shifts away from what was observed during training. DataOps teams track the health and performance of the pipeline to ensure sustained value and confidence in the ML solution.
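The retraining policy described above (a fixed cadence, or earlier if drift appears) can be sketched as a simple decision function. This example approximates drift as a drop in monitored accuracy relative to a baseline, which is only one of several possible drift signals; the function name, the 30-day cadence, and the 0.05 tolerance are assumptions for illustration.

```python
from datetime import date, timedelta


def should_retrain(last_trained, today, baseline_accuracy, recent_accuracy,
                   cadence_days=30, max_accuracy_drop=0.05):
    """Retrain when the cadence has elapsed or accuracy has dropped too far."""
    cadence_due = (today - last_trained) >= timedelta(days=cadence_days)
    drift_detected = (baseline_accuracy - recent_accuracy) > max_accuracy_drop
    return cadence_due or drift_detected


# Recently trained and stable: no retraining needed.
stable = should_retrain(date(2024, 1, 1), date(2024, 1, 10), 0.90, 0.89)

# Recently trained, but accuracy fell by 0.10: drift triggers retraining.
drifted = should_retrain(date(2024, 1, 1), date(2024, 1, 10), 0.90, 0.80)

# Accuracy is stable, but 45 days have passed: the cadence triggers retraining.
overdue = should_retrain(date(2024, 1, 1), date(2024, 2, 15), 0.90, 0.90)
```

A monitoring system would typically evaluate a check like this on a schedule and hand positive results to the retraining pipeline.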

Summary

A well-structured MLOps framework is essential for successful AI/ML initiatives. aiDataWorks provides end-to-end MLOps implementation — from deployment and monitoring to governance and retraining — ensuring your ML models deliver reliable business impact.

MLOps requires orchestrating multiple tools, roles, and processes within a complex ecosystem. aiDataWorks simplifies this by helping you deploy, monitor, manage, and govern your entire AI/ML pipeline in one place — powered by Informatica products and supported by our expertise across data science, model development, and training.